AWS IoT Core: Building Connected Device Solutions

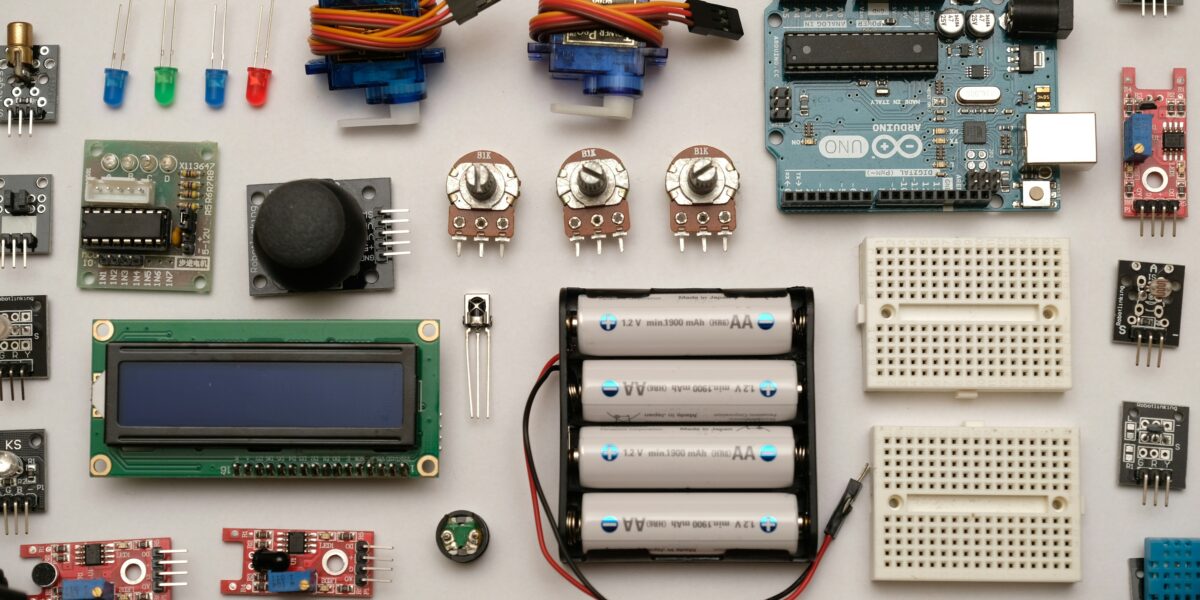

AWS IoT Core can feel complicated with all the buzzwords and marketing fluff flying around. As someone who spent the better part of two years wiring up connected devices for a logistics company, I learned most of what I know about this service the hard way: through broken MQTT connections at 2 AM and certificates that expired at the worst possible moment. Today, I will share what I learned with you.

Here is the thing about IoT on AWS that nobody tells you upfront: the console makes it look simple, but the real complexity lives in the authentication layer and message routing. I remember my first attempt at connecting a fleet of temperature sensors. Took me three days just to get the certificates sorted out. Looking back, I was overcomplicating things, but that learning curve is real and I want to help you avoid the same pitfalls.

Probably should have led with this section, honestly. Before you touch a single line of code, you need to understand how the core architecture fits together. IoT Core is not just one service — it is a collection of components that work in concert. The Device Gateway handles your connections, the Message Broker routes everything, and the Rules Engine decides what happens with each message that comes through. Miss any one of these pieces and you will spend hours debugging what turns out to be a simple architectural misunderstanding.

IoT Core Architecture

Let me break down the key building blocks. When I first started with IoT Core, I thought of it like a simple message queue. Turns out, it is way more nuanced than that. Each component serves a distinct purpose, and understanding this separation of concerns will save you a ton of debugging time down the road.

| Component | Purpose | Key Features |
|---|---|---|
| Device Gateway | Entry point for device connections | MQTT, HTTPS, WebSocket support |
| Message Broker | Pub/sub message routing | Topic-based filtering, QoS levels |
| Rules Engine | Process and route messages | SQL-like queries, 20+ action types |
| Device Shadow | Virtual device state | Offline sync, desired vs. reported state |
| Registry | Device identity management | Thing types, groups, attributes |

That is what makes IoT Core endearing to us cloud engineers — everything has its place and there is a clear separation of concerns. The Device Gateway handles connection protocols so your broker does not have to worry about transport details. The Message Broker focuses purely on routing. The Rules Engine takes care of processing logic. It is genuinely well-architected, which is something I cannot always say about AWS services.

One thing I wish someone had told me earlier: the Registry is more powerful than it looks at first glance. You can organize your devices into thing types and groups, then apply policies at the group level. When you are managing hundreds or thousands of devices, that hierarchical organization becomes absolutely essential. Trust me on this one — I tried managing a flat list of 500 sensors once and it was a nightmare.

Device Authentication

Security in IoT is not optional, and AWS takes this seriously. IoT Core gives you several authentication options, but I will be honest — for production workloads, X.509 certificates are really the only way to go. I have seen teams try to use custom authorizers or Cognito tokens for device authentication, and it always ends up being more trouble than it is worth.

X.509 Certificates (Recommended)

Each device gets its own unique certificate for mutual TLS authentication. This is the part where most people trip up, myself included. The process itself is straightforward once you have done it a few times, but the first time through feels like you are juggling a handful of AWS CLI commands at once. Here is the workflow I follow every single time I provision a new device:

```bash
# Create a thing and certificates using AWS CLI

# 1. Create a thing
aws iot create-thing --thing-name "sensor-001"

# 2. Create keys and certificate
#    (the JSON output includes the certificateArn used in steps 3 and 4)
aws iot create-keys-and-certificate \
  --set-as-active \
  --certificate-pem-outfile "cert.pem" \
  --public-key-outfile "public.key" \
  --private-key-outfile "private.key"

# 3. Attach policy to certificate
#    (assumes SensorPolicy was already created with aws iot create-policy)
aws iot attach-policy \
  --policy-name "SensorPolicy" \
  --target "arn:aws:iot:us-east-1:123456789:cert/abc123..."

# 4. Attach certificate to thing
aws iot attach-thing-principal \
  --thing-name "sensor-001" \
  --principal "arn:aws:iot:us-east-1:123456789:cert/abc123..."
```

A few things to watch out for here. First, save those certificate files somewhere safe immediately: you cannot download the private key again after creation. I learned this the hard way when I accidentally closed my terminal before copying the key and had to revoke and recreate the whole thing. Second, make sure your policy is actually correct before attaching it. A misconfigured policy can let the device connect but then block its publishes or the messages it expects to receive, which is incredibly frustrating to debug.
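Because the private key cannot be downloaded again, it helps to persist it the moment it is created. The sketch below assumes you call the API from Python rather than the CLI; save_credentials is a hypothetical helper, and the response dict is assumed to have the shape boto3's iot create_keys_and_certificate call returns (certificateArn, certificatePem, and a keyPair holding PublicKey and PrivateKey). New files are created with owner-only permissions on POSIX systems.

```python
import os
from pathlib import Path


def save_credentials(response: dict, out_dir: str) -> dict:
    """Hypothetical helper: write cert and keys from a CreateKeysAndCertificate
    response to out_dir and return the written paths keyed by file name."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)

    files = {
        "cert.pem": response["certificatePem"],
        "public.key": response["keyPair"]["PublicKey"],
        "private.key": response["keyPair"]["PrivateKey"],
    }
    written = {}
    for name, contents in files.items():
        path = target / name
        # New files get mode 0o600 (owner read/write, further limited by the
        # umask), so the private key is never created world-readable.
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
        with os.fdopen(fd, "w") as fh:
            fh.write(contents)
        written[name] = str(path)

    # Keep the ARN next to the files; the attach steps need it.
    (target / "certificate_arn.txt").write_text(response["certificateArn"])
    return written
```

With boto3 installed, a call might look like save_credentials(boto3.client("iot").create_keys_and_certificate(setAsActive=True), "./certs"), after which the attach steps can read the ARN from certificate_arn.txt.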

IoT Policy Example

Policies in IoT Core follow the same general pattern as IAM policies, but with IoT-specific actions and resources. The key difference is that you can use thing name variables in your resource ARNs, which lets you write one policy that dynamically scopes permissions per device. This was a game-changer for me — instead of creating a unique policy for each sensor, I could use a single template policy that references the connecting thing name.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "iot:Connect",
      "Resource": "arn:aws:iot:us-east-1:*:client/${iot:Connection.Thing.ThingName}"
    },
    {
      "Effect": "Allow",
      "Action": "iot:Publish",
      "Resource": "arn:aws:iot:us-east-1:*:topic/sensors/${iot:Connection.Thing.ThingName}/*"
    },
    {
      "Effect": "Allow",
      "Action": "iot:Subscribe",
      "Resource": "arn:aws:iot:us-east-1:*:topicfilter/commands/${iot:Connection.Thing.ThingName}"
    },
    {
      "Effect": "Allow",
      "Action": "iot:Receive",
      "Resource": "arn:aws:iot:us-east-1:*:topic/commands/${iot:Connection.Thing.ThingName}"
    }
  ]
}
```

Notice how I am using the iot:Connection.Thing.ThingName variable in the resource ARNs. This means each device can only publish to its own topics and subscribe to its own command channel. Also note the separate iot:Receive statement: iot:Subscribe only authorizes the subscription itself, and without iot:Receive on the matching topic the broker will not deliver command messages to the device. It is a simple but effective way to implement least-privilege access without drowning in individual policies. I cannot stress enough how important this pattern is for production deployments.
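To see how the policy variable scopes access, here is a deliberately simplified simulation of the Publish and Subscribe statements from the policy above. It is not how IoT Core actually evaluates policies (it ignores the ARN prefix, Deny statements, and everything else); it only substitutes the thing name into each resource pattern and treats * as a wildcard using Python's fnmatch. The is_allowed helper and the POLICY mapping are hypothetical.

```python
import fnmatch

THING_VAR = "${iot:Connection.Thing.ThingName}"

# Resource patterns from the Publish and Subscribe statements, with the
# "arn:aws:iot:us-east-1:*:" prefix stripped for readability.
POLICY = {
    "iot:Publish": "topic/sensors/" + THING_VAR + "/*",
    "iot:Subscribe": "topicfilter/commands/" + THING_VAR,
}


def is_allowed(action: str, resource: str, thing_name: str) -> bool:
    """Simplified stand-in for IoT policy evaluation (hypothetical helper)."""
    pattern = POLICY.get(action)
    if pattern is None:
        return False  # no matching Allow statement: implicit deny
    # Resolve the policy variable for the connecting device...
    rendered = pattern.replace(THING_VAR, thing_name)
    # ...then treat '*' as "any characters", like the policy wildcard.
    return fnmatch.fnmatchcase(resource, rendered)


# Each device can reach only its own topics.
print(is_allowed("iot:Publish", "topic/sensors/sensor-001/telemetry", "sensor-001"))  # True
print(is_allowed("iot:Publish", "topic/sensors/sensor-002/telemetry", "sensor-001"))  # False
```

The point of the exercise is that one template policy yields a different effective policy per device, because the variable is resolved at connection time.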

Publishing Data from Devices

Alright, now we get to the fun part — actually sending data from devices to the cloud. The AWS IoT Device SDK for Python makes this surprisingly painless. I have used both the v1 and v2 SDKs, and v2 is a massive improvement in terms of reliability and reconnection handling. If you are starting fresh, go straight to v2 and do not look back.

```python
import json
import time

from awscrt import mqtt
from awsiot import mqtt_connection_builder

# Configuration
ENDPOINT = "xxxxxx-ats.iot.us-east-1.amazonaws.com"
CLIENT_ID = "sensor-001"  # same as the thing name, as the Connect policy expects
CERT_PATH = "./certs/cert.pem"
KEY_PATH = "./certs/private.key"
CA_PATH = "./certs/AmazonRootCA1.pem"

# Create MQTT connection (mutual TLS with the device certificate)
mqtt_connection = mqtt_connection_builder.mtls_from_path(
    endpoint=ENDPOINT,
    cert_filepath=CERT_PATH,
    pri_key_filepath=KEY_PATH,
    ca_filepath=CA_PATH,
    client_id=CLIENT_ID,
    clean_session=False,
    keep_alive_secs=30,
)

# Connect and block until the broker accepts the connection
connect_future = mqtt_connection.connect()
connect_future.result()
print("Connected!")


# Publish sensor data every 10 seconds
def publish_telemetry():
    while True:
        payload = {
            "device_id": CLIENT_ID,
            "timestamp": int(time.time()),
            "temperature": 23.5,
            "humidity": 65.2,
            "battery": 87,
        }
        mqtt_connection.publish(
            topic=f"sensors/{CLIENT_ID}/telemetry",
            payload=json.dumps(payload),
            qos=mqtt.QoS.AT_LEAST_ONCE,
        )
        print(f"Published: {payload}")
        time.sleep(10)


try:
    publish_telemetry()
finally:
    # Disconnect cleanly on Ctrl+C or any unexpected error
    mqtt_connection.disconnect().result()
```

A couple of things worth noting about this code. Setting clean_session to False requests a persistent session, so the broker remembers your subscriptions, and queues QoS 1 messages for them, if the device disconnects and reconnects. For most sensor use cases, this is exactly what you want. The keep_alive_secs parameter sets the keep-alive interval: if the broker hears nothing from the device for about one and a half times that window, it considers the connection dead. I usually set this to 30 seconds for cellular-connected devices and 60 seconds for Wi-Fi devices, but your mileage may vary depending on network conditions.

Also, I always use QoS 1 (AT_LEAST_ONCE) for telemetry data. QoS 0 is fine for high-frequency, non-critical data like debug logs, but for anything you actually care about, the at-least-once delivery of QoS 1 is worth the slight overhead. The tradeoff is that every QoS 1 message must be acknowledged by the broker with a PUBACK, which adds a small amount of latency, and a retransmitted message can occasionally arrive twice. In practice I have never seen the latency be a problem.
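One consequence of at-least-once delivery is that the same reading can be delivered more than once, for example when an acknowledgment is lost and the message is retransmitted. Here is a minimal sketch of an idempotent consumer, assuming each reading is uniquely identified by its device_id and timestamp fields (which holds for the publisher above, since it sends at most one reading per device every 10 seconds). The DedupingConsumer class and its on_message signature are hypothetical; you would call it from whatever subscription callback you use.

```python
import json


class DedupingConsumer:
    """Hypothetical handler that skips duplicate QoS 1 deliveries by
    remembering recently seen (device_id, timestamp) keys."""

    def __init__(self, max_keys: int = 10_000):
        self.max_keys = max_keys
        self._seen = {}  # insertion-ordered dict used as a bounded set
        self.processed = []

    def on_message(self, topic: str, payload: bytes) -> bool:
        reading = json.loads(payload)
        key = (reading["device_id"], reading["timestamp"])
        if key in self._seen:
            return False  # duplicate: skip side effects
        self._seen[key] = None
        if len(self._seen) > self.max_keys:
            # Forget the oldest key so memory stays bounded.
            self._seen.pop(next(iter(self._seen)))
        self.processed.append(reading)
        return True
```

The bound on remembered keys is a tradeoff: a duplicate that arrives after its key has been evicted will be processed again, so size max_keys to comfortably cover your redelivery window.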

IoT Rules Engine

This is where IoT Core really starts to shine. The Rules Engine lets you write SQL-like queries against your incoming message stream and route matching messages to over twenty different AWS services. I think of it as a lightweight stream processor that sits right at the edge of your data pipeline. No need to spin up Kinesis or write Lambda glue code for simple routing scenarios.

```hcl
# Terraform: Create IoT Rule
resource "aws_iot_topic_rule" "sensor_rule" {
  name        = "process_sensor_data"
  enabled     = true
  sql         = "SELECT * FROM 'sensors/+/telemetry' WHERE temperature > 30"
  sql_version = "2016-03-23"

  # Send to Lambda for processing. The function also needs an
  # aws_lambda_permission that allows iot.amazonaws.com to invoke it.
  lambda {
    function_arn = aws_lambda_function.process_alert.arn
  }

  # Store in DynamoDB. "$${...}" escapes Terraform interpolation so the
  # literal ${device_id} substitution template is passed to IoT Core.
  dynamodb {
    table_name      = aws_dynamodb_table.sensor_data.name
    hash_key_field  = "device_id"
    hash_key_value  = "$${device_id}"
    range_key_field = "timestamp"
    range_key_value = "$${timestamp}"
    payload_field   = "payload"
    role_arn        = aws_iam_role.iot_rule_role.arn
  }

  # Archive to S3
  s3 {
    bucket_name = aws_s3_bucket.sensor_archive.id
    key         = "telemetry/$${device_id}/$${timestamp}.json"
    role_arn    = aws_iam_role.iot_rule_role.arn
  }

  # Send alert to SNS for high temperature
  sns {
    target_arn     = aws_sns_topic.alerts.arn
    role_arn       = aws_iam_role.iot_rule_role.arn
    message_format = "JSON"
  }
}
```

That Terraform snippet shows what a real-world rule looks like. The SQL query filters for high-temperature readings, and the rule then fans out to four destinations at once: Lambda handles the alert logic, DynamoDB stores the reading for real-time dashboards, S3 archives it for long-term analysis, and SNS sends notifications. Keep in mind that the WHERE clause applies to every action in the rule, so only readings above 30 reach DynamoDB and S3; to archive all telemetry, you would need a second rule without the filter. You can accomplish in one rule what would normally require a bunch of glue code and multiple Lambda functions.
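To reason about which messages actually reach each action, it can help to emulate the rule locally. This is a simplified sketch, not the Rules Engine: route and render are hypothetical names, render only handles bare ${field} placeholders (the real substitution templates accept full expressions), and the message is assumed to have already matched the sensors/+/telemetry topic filter.

```python
import re

# Matches simple substitution placeholders such as ${device_id}.
PLACEHOLDER = re.compile(r"\$\{(\w+)\}")


def render(template: str, message: dict) -> str:
    """Fill ${field} placeholders from top-level payload fields."""
    return PLACEHOLDER.sub(lambda m: str(message[m.group(1)]), template)


def route(message: dict) -> dict:
    """Emulate: SELECT * FROM 'sensors/+/telemetry' WHERE temperature > 30."""
    temperature = message.get("temperature")
    if not isinstance(temperature, (int, float)) or temperature <= 30:
        return {}  # WHERE is false (or field missing): no action runs
    return {
        "lambda": message,
        "dynamodb": {
            "device_id": render("${device_id}", message),
            "timestamp": render("${timestamp}", message),
            "payload": message,
        },
        "s3_key": render("telemetry/${device_id}/${timestamp}.json", message),
        "sns": message,
    }
```

Running a normal reading through route returns an empty dict, which makes the "all actions share one WHERE clause" behavior easy to see in a unit test.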

One gotcha I ran into: the Rules Engine SQL syntax looks like standard SQL but has some quirks. Nested JSON fields use dot notation (for example, state.reported.temperature), and you need specific built-in functions for things like timestamp formatting. The documentation is decent but not comprehensive; I ended up discovering several useful functions just by experimenting with sample messages in the console's MQTT test client. Pro tip: always test your rule queries in the IoT console before deploying them with Terraform or CloudFormation.
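The FROM clause takes an MQTT topic filter, and the wildcard rules are easy to get wrong: + matches exactly one topic level, while # matches the remaining levels and must come last. Below is a hypothetical topic_matches helper implementing those MQTT rules, including the MQTT rule that a wildcard in the first level does not match reserved topics starting with $ (such as the $aws shadow topics). It is handy for unit-testing a topic hierarchy before writing rules against it.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter matching: '+' matches one level, '#' (last level
    only) matches its parent level and everything below it."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")

    # Wildcards in the first level never match topics beginning with '$'.
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False

    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # matches whatever remains, including nothing
        if i >= len(t_levels):
            return False  # filter is deeper than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch
    # Without '#', the topic must not be deeper than the filter.
    return len(f_levels) == len(t_levels)
```

Note that sensors/+/telemetry does not match a deeper topic like sensors/warehouse-a/sensor-001/telemetry; a single + never spans more than one level.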

Device Shadows

Device Shadows are probably my favorite feature in IoT Core, and I think they are massively underutilized. The concept is simple: a shadow is a JSON document that represents the last known state of a device. When a device goes offline, applications can still read the shadow to see what the device was doing before it disconnected, and they can update the desired state so the device picks up changes when it reconnects.

Shadow State Model:

- Reported: State reported by the device — this is what the device says it is actually doing right now

- Desired: State requested by applications — this is what you want the device to do next

- Delta: The difference between desired and reported — this is what the device needs to act on

Example shadow document:

```json
{
  "state": {
    "desired": {
      "power": "on",
      "temperature": 22
    },
    "reported": {
      "power": "off",
      "temperature": 24
    },
    "delta": {
      "power": "on",
      "temperature": 22
    }
  },
  "metadata": { ... },
  "version": 12,
  "timestamp": 1703520000
}
```

The delta section is the magic. When your application sets the desired power state to “on” but the device last reported “off”, the shadow automatically generates a delta. Your device firmware subscribes to delta updates and acts on them. It is an elegant pattern that handles the inherently unreliable nature of IoT connectivity without requiring you to build a custom state management layer.
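The delta rule itself fits in a few lines. This is a simplified model of the behavior described above, not the service's implementation: compute_delta is a hypothetical name, nested objects are compared key by key, other values (including lists) are compared as a whole, and keys that appear only in reported are ignored.

```python
def compute_delta(desired: dict, reported: dict) -> dict:
    """Return the desired fields whose values differ from reported."""
    delta = {}
    for key, want in desired.items():
        have = reported.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            # Recurse so only the changed nested fields appear in the delta.
            nested = compute_delta(want, have)
            if nested:
                delta[key] = nested
        elif want != have:
            delta[key] = want
    return delta


desired = {"power": "on", "temperature": 22}
reported = {"power": "off", "temperature": 24}
print(compute_delta(desired, reported))  # {'power': 'on', 'temperature': 22}
```

Once the device reports power "on" and temperature 22, the same call returns an empty dict, which is the "nothing left to act on" state.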

I have used shadows extensively for over-the-air configuration changes. Instead of building a complex command-and-control system, I just update the shadow's desired state with new configuration values and let each device pick them up at its own pace. Works beautifully for things like adjusting sensor polling intervals or updating firmware version targets across a fleet.
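On the device side, handling a configuration change boils down to applying the delta and reporting the new values back, which clears the delta. A minimal sketch, assuming a flat configuration dict; apply_delta and the polling_interval_secs setting are hypothetical. The delta message arrives on $aws/things/<thingName>/shadow/update/delta with the changed fields under state, and the returned report payload would be published to $aws/things/<thingName>/shadow/update.

```python
import json


def apply_delta(config: dict, delta_message: bytes):
    """Apply a shadow delta message to local config.

    Returns (new_config, report_payload) where report_payload is the JSON
    document to publish back so the reported state matches desired.
    """
    delta = json.loads(delta_message)["state"]
    # Shallow merge: adequate for flat settings, not nested objects.
    new_config = {**config, **delta}
    report = {"state": {"reported": delta}}
    return new_config, json.dumps(report)


config = {"polling_interval_secs": 60, "firmware_target": "1.4.0"}
message = json.dumps({"version": 13, "state": {"polling_interval_secs": 30}}).encode()
config, report = apply_delta(config, message)
print(config)  # {'polling_interval_secs': 30, 'firmware_target': '1.4.0'}
```

Reporting only the fields that changed is enough, because the shadow service merges a partial reported document into the existing state.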

Fleet Provisioning

When you are dealing with more than a handful of devices, manually creating certificates and registering things becomes impractical. Fleet Provisioning automates this entire workflow, and it was a lifesaver when we needed to onboard 2,000 sensors in a warehouse deployment. The basic idea is that devices start with a shared “claim” certificate that gives them just enough permission to register themselves, and then the provisioning process issues them their own unique identity.

- Claim Certificates: A shared bootstrap certificate that only allows the device to call the provisioning APIs. Think of it as a temporary guest pass that gets swapped for a permanent badge during onboarding.

- Provisioning Templates: These define how devices get registered and configured. You can set thing names based on serial numbers, assign devices to groups based on hardware type, and attach specific policies automatically. I usually include custom attributes like firmware version and deployment region in the template.

- Pre-Provisioning Hooks: Lambda functions that validate devices before provisioning completes. This is your last line of defense against unauthorized devices. I always use a hook that checks the device serial number against a database of known hardware — if the serial is not in the list, provisioning gets rejected.
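A pre-provisioning hook can be quite small. This sketch assumes the provisioning template defines a SerialNumber parameter that devices send during registration, and uses a hard-coded set as a stand-in for the hardware database; both are hypothetical. The event's parameters field and the allowProvisioning response field follow the hook contract as I understand it from the Fleet Provisioning documentation, so check them against the current docs before relying on this.

```python
# Stand-in for the database of known hardware (hypothetical serials).
KNOWN_SERIALS = {"WH-000123", "WH-000124"}


def lambda_handler(event, context):
    """Hypothetical pre-provisioning hook: only allow registration for
    devices whose serial number is in the known-hardware list."""
    # Template parameters sent by the device during provisioning.
    serial = event.get("parameters", {}).get("SerialNumber")
    # A missing serial (None) is never in the set, so it is rejected too.
    return {"allowProvisioning": serial in KNOWN_SERIALS}
```

In a real deployment the set lookup would become a query against whatever system tracks your manufactured hardware.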

IoT Core Pricing

Pricing is one area where IoT Core genuinely surprised me in a good way. For most small to medium deployments, the costs are negligible. The pay-per-use model means you are not paying for idle capacity, which is perfect for IoT workloads that tend to be bursty. Here is a breakdown of what you will actually be charged for:

| Component | Price (US East) | Notes |
|---|---|---|
| Connectivity | $0.08 per million minutes | Connected device time |
| Messaging | $1.00 per million messages | First 1B messages/month |
| Rules Engine | $0.15 per million rules triggered | Per rule execution |
| Device Shadow | $1.25 per million operations | Update/get operations |

For context, a deployment of 1,000 sensors each sending one message per minute produces about 43.2 million messages in a 30-day month, which costs roughly $43 for messaging alone. Add in connectivity (about $3.46 for 43.2 million connected minutes) and shadow operations, and you are still well under $100. Compare that to running your own MQTT broker on EC2, where you are paying for compute whether the devices are talking or not, and the managed service is a no-brainer for most use cases. Self-hosting generally only starts to make sense at extremely high message volumes with predictable traffic patterns.
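The arithmetic behind those figures is easy to reproduce. A rough sketch using the US East prices from the table, assuming a 30-day month and devices that stay connected the whole time; monthly_estimate is a hypothetical helper and leaves out shadow and rule charges.

```python
# US East list prices per million units (USD), from the table above.
PRICE_PER_MILLION_USD = {
    "connectivity_minutes": 0.08,
    "messages": 1.00,
}


def monthly_estimate(devices: int, msgs_per_minute: float, days: int = 30) -> dict:
    """Rough monthly cost for always-connected devices publishing at a fixed rate."""
    minutes = devices * days * 24 * 60          # connected minutes, whole fleet
    messages = minutes * msgs_per_minute        # messages published per month
    connectivity = minutes / 1_000_000 * PRICE_PER_MILLION_USD["connectivity_minutes"]
    messaging = messages / 1_000_000 * PRICE_PER_MILLION_USD["messages"]
    return {
        "messages": messages,
        "connectivity": round(connectivity, 2),
        "messaging": round(messaging, 2),
        "total": round(connectivity + messaging, 2),
    }


print(monthly_estimate(1000, 1))
```

For the 1,000-sensor example this gives 43,200,000 messages, about $43.20 for messaging and $3.46 for connectivity; changing msgs_per_minute is a quick way to see how sensitive the bill is to publish frequency.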

Best Practices

After a couple of years working with IoT Core across different projects, these are the practices I always follow. Some of these I learned from the documentation, but most came from painful production incidents that I would rather not repeat.

- Use unique certificates per device — Never share certificates across devices. If one device gets compromised, you can revoke its certificate without affecting the rest of your fleet. I have seen teams share a single certificate across hundreds of devices “to keep things simple,” and it always ends in tears when they need to do a security rotation.

- Implement reconnection logic — Network failures are not a matter of if but when. The v2 SDK handles reconnection automatically, but make sure you test your application’s behavior during prolonged outages. I always add a local data buffer so the device can cache readings while disconnected and publish them once connectivity is restored.

- Use QoS 1 for critical data: this ensures at-least-once delivery, meaning the sender retransmits a message until it receives an acknowledgment (PUBACK), so consumers may occasionally see duplicates but nothing is silently lost. For non-critical telemetry like debug logs, QoS 0 is fine and reduces overhead.

- Partition topics wisely — Use hierarchical topics like sensors/location/device/data-type. This makes it easy to write Rules Engine queries that filter on specific locations or device types without processing every single message. Flat topic structures might seem simpler, but they become unmanageable as your fleet grows.

- Monitor with CloudWatch — Track connection counts, message rates, and rule failures religiously. Set up alarms for unusual patterns. I once caught a firmware bug that was causing devices to reconnect every 30 seconds — the CloudWatch connection metric spiked and alerted us before it became a real problem.
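For the local-buffer practice from the reconnection tip, here is a minimal sketch: readings are queued, sent in order, and kept whenever a publish fails. BufferedPublisher is hypothetical, and it assumes you pass in a publish callable that raises when the message cannot be sent; the exact exception type depends on your transport, which is why it catches Exception broadly. Because the deque has a maxlen, the oldest readings are dropped first if an outage outlasts the buffer.

```python
from collections import deque
from typing import Callable


class BufferedPublisher:
    """Hypothetical wrapper that queues readings while offline and
    flushes them in order once publishing succeeds again."""

    def __init__(self, publish: Callable[[str, str], None], max_buffered: int = 1000):
        self._publish = publish
        # Bounded queue: when full, appending drops the oldest reading.
        self._queue = deque(maxlen=max_buffered)

    def send(self, topic: str, payload: str) -> None:
        self._queue.append((topic, payload))
        self.flush()

    def flush(self) -> int:
        """Try to send everything queued; return how many were sent."""
        sent = 0
        while self._queue:
            topic, payload = self._queue[0]
            try:
                self._publish(topic, payload)
            except Exception:
                break  # still offline: keep the rest queued for later
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._queue)
```

Calling flush from a reconnect callback, in addition to every send, drains the backlog as soon as connectivity returns.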

Further Reading

If you want to go deeper, here are the resources I found most useful when I was ramping up on IoT Core. The official developer guide is thorough but can be overwhelming — I recommend starting with the Device SDK documentation and working your way up from there.

Getting Started: My honest recommendation is to skip the console wizard and follow the CLI steps I outlined above. The wizard hides important details about how certificates and policies are linked together, and understanding those relationships will save you hours of debugging later. Start with one device, get comfortable with the provisioning flow, and then look into Fleet Provisioning when you are ready to scale.