Exploring the Concept of a Data Lake

The concept of a data lake emerged as organizations struggled to manage vast amounts of data in diverse formats. Traditional relational databases and data warehouses expect a schema to be defined before data is loaded. A data lake instead stores data in its native format, so no schema has to be defined up front just to store it.
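
To make "native format" concrete, here is a minimal Python sketch of raw ingestion. It assumes a local temporary directory as a stand-in for an object store such as Amazon S3. The function name `ingest_raw` and the `raw/<source>/<date>/` layout are hypothetical illustrations, not an AWS API. Each payload is written byte-for-byte, with no parsing or schema check.

```python
import tempfile
from datetime import date
from pathlib import Path


def ingest_raw(lake_root: Path, source: str, name: str, payload: bytes, day: date) -> Path:
    """Write payload byte-for-byte into a hypothetical raw/<source>/<date>/ zone.

    No parsing or schema validation happens here: CSV, JSON, and binary
    files are all stored exactly as received.
    """
    target = lake_root / "raw" / source / day.isoformat() / name
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(payload)
    return target


# A temporary directory stands in for an object store such as Amazon S3.
lake = Path(tempfile.mkdtemp())
day = date(2024, 1, 15)
stored = [
    ingest_raw(lake, "crm", "customers.csv", b"id,name\n1,Ada\n", day),
    ingest_raw(lake, "web", "events.json", b'{"user_id": 1, "action": "click"}\n', day),
    # Placeholder bytes (a JPEG header) standing in for an image file.
    ingest_raw(lake, "cameras", "frame.jpg", b"\xff\xd8\xff\xe0", day),
]
for path in stored:
    print(path.relative_to(lake).as_posix())
```

Structured (CSV), semi-structured (JSON), and unstructured (image bytes) data all land through the same call. Interpreting them is deferred to whoever reads them later.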

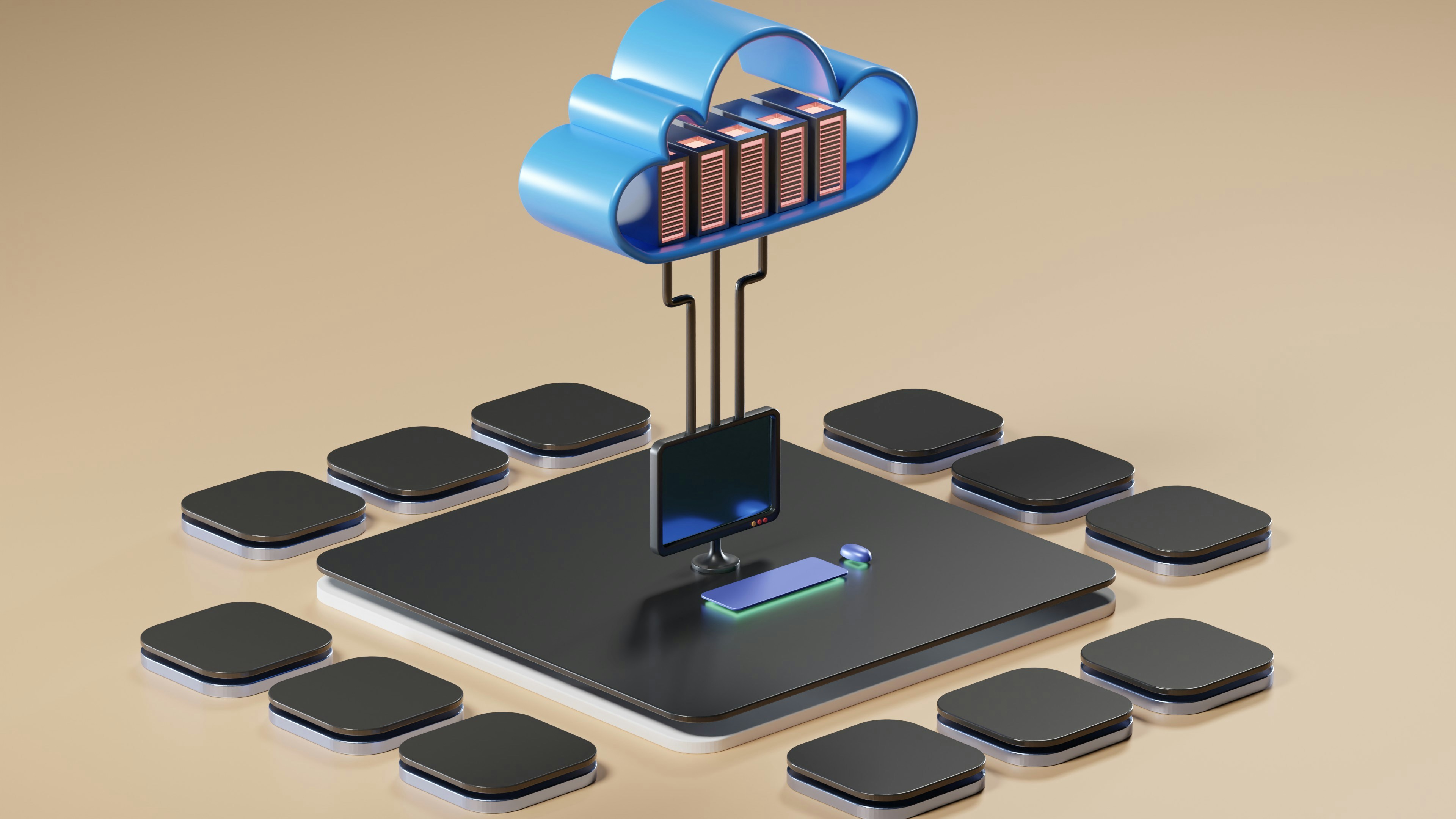

Cloud architecture diagram A data lake is a centralized repository designed to store, process, and secure large amounts of structured, semi-structured, and unstructured data. This concept was coined in contrast to a data warehouse. While a data warehouse requires pre-modeling of data, a data lake allows for flexibility.
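
The difference between pre-modeling data before loading (often called schema-on-write) and the lake's flexibility (schema-on-read) can be sketched in a few lines of Python. This is an illustrative assumption, not how any particular warehouse or AWS service works. `WAREHOUSE_SCHEMA`, `load_schema_on_write`, and `read_with_schema` are hypothetical names, and the event records are made-up sample data.

```python
import json

# Schema-on-write (warehouse-style): every record must match a fixed schema
# at load time; nonconforming records are rejected before storage.
# This schema is a hypothetical example.
WAREHOUSE_SCHEMA = {"user_id": int, "action": str}


def load_schema_on_write(records):
    accepted, rejected = [], []
    for rec in records:
        # Exact field set required, then a type check per field.
        conforms = set(rec) == set(WAREHOUSE_SCHEMA) and all(
            isinstance(rec[field], typ) for field, typ in WAREHOUSE_SCHEMA.items()
        )
        (accepted if conforms else rejected).append(rec)
    return accepted, rejected


# Schema-on-read (lake-style): raw lines are kept as-is, and each reader
# projects only the fields it needs; missing fields become None.
def read_with_schema(raw_lines, fields):
    for line in raw_lines:
        rec = json.loads(line)
        yield {field: rec.get(field) for field in fields}


# Hypothetical sample events with varying shapes.
RAW_EVENTS = [
    '{"user_id": 1, "action": "click"}',
    '{"user_id": 2, "action": "view", "device": "mobile"}',
    '{"user_id": 3}',
]

accepted, rejected = load_schema_on_write([json.loads(line) for line in RAW_EVENTS])
print(len(accepted), "accepted,", len(rejected), "rejected")  # 1 accepted, 2 rejected
print(list(read_with_schema(RAW_EVENTS, ["user_id", "device"])))
```

Here the warehouse-style loader keeps only the first event. The lake keeps all three and lets a later reader pick up the `device` field that the fixed schema never anticipated. The trade-off is that every reader must now handle missing or unexpected fields itself.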

Jennifer Walsh

Author & Expert

Senior Cloud Solutions Architect with 12 years of experience in AWS, Azure, and GCP. Jennifer has led enterprise migrations for Fortune 500 companies and holds AWS Solutions Architect Professional and DevOps Engineer certifications. She specializes in serverless architectures, container orchestration, and cloud cost optimization. Previously a senior engineer at AWS Professional Services.
